November 6, 2023

In the current educational landscape, school trustees face a distinct and challenging environment marked by political maneuvering and persistent change, driven largely by the recent pandemic. The shockwaves COVID-19 sent through the educational system have had far-reaching consequences, including learning setbacks, heightened social divisions, and the exacerbation of pre-existing disruptions in school governance (Bonal & González, 2020).

Notably, the pandemic's impact on learning in Texas schools was particularly significant because schools were unprepared to offer remote learning in the spring of 2020. The result was a learning loss of up to two months, with even more severe setbacks in schools serving high-poverty communities (Patarapichayatham et al., 2021).

Extensive research consistently underscores the pivotal role of teachers as the primary influencers on student outcomes (Abry et al., 2016; Bartoletti & Connelly, 2013; Dennie et al., 2019; Nairz-Wirth & Feldmann, 2017). However, given the tumultuous state of today's educational landscape, the future success of students hinges largely on governance teams assuming a prominent role within their school districts. These teams must establish a robust framework and offer unwavering support to drive student achievement, a role that has always been crucial but has now become even more pronounced.

A robust governance structure is indispensable for ensuring positive student outcomes and for keeping school systems resilient in the face of evolving challenges. As Rice et al. (2000) demonstrated, the focus and effectiveness of school governance directly affect student achievement.

This exploratory study examined the efficacy of the Texas Education Agency's (TEA) performance management governance initiative, which guides boards of trustees in implementing the best practices outlined in the Texas State Board of Education's Framework for School Board Development. The methodology combined semi-structured interviews with nine superintendents, a Likert-type survey administered to 48 board members, and an analysis of student outcomes. The primary objective was to gauge the effectiveness of the Lone Star Governance (LSG) initiative.

The superintendent feedback and the subsequent analysis consistently reinforced previous research findings, indicating that student outcomes improve notably when the best practices outlined in the framework are faithfully implemented. In particular, the intervention group showed substantially larger gains in state accountability scores than the comparison group.

Moreover, campuses whose boards worked with a governance coach showed a modest uptick in student outcomes compared with those without coaching support. Superintendent interviews and board surveys both underscored the pivotal role of these coaches in holding boards accountable for implementation. The growth was also larger among low-performing campuses than among their non-low-performing counterparts. Even so, superintendent interviews and board surveys agreed in dispelling the notion that the initiative targets only low-performing campuses; participants instead recognized it as a performance management tool that fosters continuous improvement.

However, the small size of the study population is a key limitation, and further research is needed to understand the intervention's full benefits. While descriptive statistics offer valuable insights for practical application in schools, robust correlational research could reveal which tools within the LSG framework most effectively enhance student outcomes.

Methods and Data

To learn more about how governance teams perceive LSG and its impact on student outcomes beyond the analysis of accountability gains, participating superintendents were asked to take part in a semi-structured interview, and board members were invited to complete a Likert-type survey.

The following questions guided this study of LSG implementation:

1) Does LSG improve student outcomes through the research-based board behaviors identified as the five pillars of the Framework for School Board Development? What is the role of the coach in LSG implementation?

2) Is LSG only beneficial to low-performing schools?

The study encompassed ten school districts, nine of which engaged the coaching services provided by the region's LSG coaches. Six of these nine began LSG implementation during the 2021-2022 school year. The remaining three districts participated in the board surveys and interviews but were excluded from the accountability analysis of student outcome improvements.

The first intervention was a two-day training program based on LSG principles, designed to build an understanding of the board behaviors that influence student outcomes in school districts. The second intervention introduced coaching to ensure the effective execution of the governance behaviors discussed during the training. Both interventions were tested using the Plan, Do, Study, Act (PDSA) model, a widely accepted school improvement protocol built on a continuous cycle of reflection and adaptation (Bryk et al., 2015).

The Likert-type survey explored board members' perceptions of LSG, the efficacy of behavior change, and the establishment of processes. It consisted of fifteen questions rated on a scale from 1 (strongly disagree) to 5 (strongly agree). Superintendent interviews were conducted after progress monitoring had been implemented, either face-to-face or via Zoom, and were not recorded. Eighteen open-ended questions covered topics ranging from the effectiveness of LSG to the need for coaching and changes in board behaviors. The interview notes were then coded and categorized into themes, which other LSG coaches validated to ensure data integrity.

A linear regression analysis was planned using variables such as time tracked in board meetings, board self-evaluation scores, and accountability scores; however, the study population was too small to yield reliable results. Similarly, an exploratory factor analysis of the Likert-type survey was planned to identify underlying survey factors, but it also required a larger sample to be accurate.

The research was limited primarily by the small population size, which produced non-normally distributed data and constrained the extent of statistical analysis. Consequently, descriptive statistics were used for the quantitative analysis. Despite these limitations, this article presents the results, using quantitative and qualitative methods to corroborate findings and to address misconceptions about LSG as a continuous improvement model applicable to all schools.

Results

Two-day LSG Intervention

The study set out to assess whether changes in school board behaviors brought about by LSG improve student outcomes. It also aimed to clarify the role coaches play in implementation and to identify which schools stand to benefit most from adopting LSG. To ensure consistency in implementing the LSG process, particularly the coaching component, the research was conducted within a single regional education service center rather than across the entire state. While this approach promoted uniformity in implementation, it also limited the study population, which made the results harder to analyze.

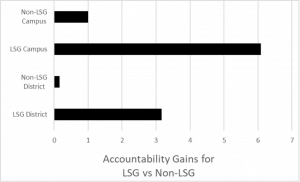

Despite the small and constrained study population, descriptive statistics indicated improved student outcomes in schools that participated in LSG compared with a sample of districts that did not. Figure 1 illustrates results that align with the findings of Rice et al. (2000) in the Lighthouse Study. LSG campuses exhibited a mean gain of 6.07 accountability points (SD = 10.08), whereas non-LSG campuses recorded a mean gain of only 1.00 accountability points (SD = 8.13). Likewise, LSG districts gained a mean of 3.16 accountability points (SD = 6.96), compared with a mean gain of 0.16 points (SD = 5.84) for non-LSG districts.
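The group comparisons above rest on a simple calculation. The sketch below illustrates it in Python under assumptions the study does not state: each campus's or district's gain is taken to be its 2022 accountability score minus its 2019 score, scores are paired by campus or district, and "SD" is the sample standard deviation. The function names `gain_summary` and `compare_groups` are hypothetical, and the example scores are invented for illustration; they are not study data.

```python
from statistics import mean, stdev


def gain_summary(scores_2019, scores_2022):
    """Return (mean gain, SD of gains) for one comparison group.

    Assumptions (not stated in the study): each list holds one accountability
    score per campus or district, the lists are in the same order, and SD is
    the sample standard deviation (n - 1 denominator).
    """
    if len(scores_2019) != len(scores_2022):
        raise ValueError("2019 and 2022 scores must be paired one-to-one")
    if len(scores_2019) < 2:
        raise ValueError("at least two units are needed for a sample SD")
    # Gain for each unit is its 2022 score minus its 2019 score.
    gains = [after - before for before, after in zip(scores_2019, scores_2022)]
    return mean(gains), stdev(gains)


def compare_groups(group_a, group_b):
    """Return group A's mean gain minus group B's mean gain.

    Each group is a (scores_2019, scores_2022) pair. This is descriptive
    only; no significance test is performed.
    """
    mean_a, _ = gain_summary(*group_a)
    mean_b, _ = gain_summary(*group_b)
    return mean_a - mean_b


if __name__ == "__main__":
    # Hypothetical scores for illustration only; these are not study data.
    lsg = ([70, 80, 65], [78, 79, 75])
    non_lsg = ([72, 81, 68], [73, 80, 70])
    m, sd = gain_summary(*lsg)
    print(f"LSG mean gain = {m:.2f} (SD = {sd:.2f})")
    print(f"Difference in mean gains = {compare_groups(lsg, non_lsg):.2f}")
```

Because the study reports only descriptive statistics, the sketch deliberately stops at means, standard deviations, and differences in means rather than testing whether those differences are statistically significant.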

Figure 1

Comparison of 2019 Accountability Scores to 2022 Accountability Scores for LSG versus Non-LSG Participants

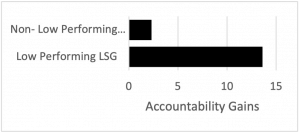

To examine the impact of LSG on low-performing schools more closely, this study compared the accountability point gains of low-performing LSG campuses with those of their non-low-performing counterparts. For this investigation, a campus was classified as low-performing if it received a D or F rating, consistent with the rationale behind the creation of LSG as articulated by Crabill (2017). Of the 39 campuses in the study, 13 were classified as low-performing.

A substantial disparity emerged when the accountability gains of these low-performing campuses were compared with those of campuses rated A through C. On average, the low-performing campuses gained 13.62 accountability points, while the A through C campuses gained 2.31 points, a difference of 11.31 points between the two group means. Figure 2 illustrates this difference across all low-performing and non-low-performing campuses in the study.

The study's outcomes were, however, shaped by the relatively small sample size, which limited the depth of analysis. Further research is warranted to examine this relationship more extensively and develop a more nuanced understanding of its implications.

Figure 2

Comparison of 2019 Accountability Score Gains to 2022 Accountability Score Gains for LSG Low-Performing Campuses versus Non-Low-Performing LSG Campuses

LSG was conceived to enhance student outcomes, particularly to help schools avoid the heightened accountability measures imposed by legislative mandates (Crabill, 2017). As illustrated in Figure 2, the findings of this study support this objective. Figure 2 also reveals a noteworthy trend: after participating in LSG, low-performing campuses gained considerably more accountability points than their non-low-performing counterparts.

The larger gains among low-performing campuses do not imply that a school must be classified as low-performing to engage with LSG. Instead, they suggest that any school, regardless of its current performance level, can benefit from LSG if there is a genuine commitment to progress and improvement.

LSG Coaching Intervention

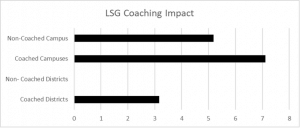

Instructional coaching has gained prominence in recent years and has a well-documented record of improving student outcomes (Bruns et al., 2018; Chen et al., 2009; Knight, 2007; Mudzimiri et al., 2014; Passmore, 2010). The learning setbacks caused by the COVID-19 pandemic have underscored the need for school boards to sharpen their focus on student outcomes. Figure 1 shows improved student outcomes associated with a governance-focused approach. However, to assess the full impact of LSG more comprehensively, this study hypothesized that governance coaches, like instructional coaches, could help improve student outcomes from the vantage point of the boardroom. Consequently, the second PDSA cycle was implemented in the nine of the ten districts in the study that opted to engage in governance coaching.

Figure 3 shows the differences in student outcomes between campuses and districts that received coaching and those that did not. Coached districts exhibited a mean gain of 3.8 accountability points (SD = 7.59), in contrast to the non-coached comparison group, which registered no gain (0). Only one district opted out of the coaching intervention, and it recorded zero accountability point gains at the district level.

Figure 3

LSG Coaching Impact on Student Outcomes

Because the non-coached district comprised several campuses, the numbers of coached and non-coached campuses were more evenly balanced than the district counts. At the campus level, the mean accountability gain from 2019 to 2021 was 5.19 (SD = 8.38) for non-coached campuses, compared with 7.11 (SD = 11.94) for coached campuses. This narrower campus-level difference underscores the need for further research to clarify the role of coaches in the implementation process.

Board Member Surveys

To understand overall perceptions of LSG implementation, a survey was administered to school board members from the participating districts after they had completed the two-day training and governance coaching. The survey was designed to address the key research questions, with items on the impact of LSG on student outcomes, the influence of governance coaches, and whether board members viewed LSG as tailored exclusively for low-performing districts.

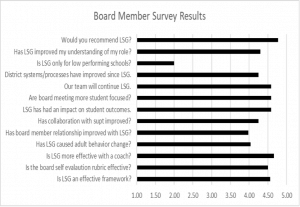

Unfortunately, the population was too small to support inferential statistical analysis, and the data did not meet the normality assumptions such analysis requires. Descriptive statistics were therefore used to assess the survey findings. Figure 4 presents the mean survey responses corresponding to the research questions.

Figure 4

Board Member Survey Results for LSG

Figure 4 presents the mean responses board members gave in their surveys. These responses indicate the substantial impact LSG had on board members' perspectives.

Addressing the first research question, on LSG's influence on board behaviors through the Texas State Board of Education's Framework for School Board Development, the survey results point to a strong effect. Board members reported an enhanced understanding of their roles, with a mean score of 4.29 (SD = 0.85). They also reported that LSG helped them strengthen district systems and processes, with a mean score of 4.25 (SD = 0.61). Importantly, LSG brought a heightened focus on student outcomes, with a mean score of 4.58 (SD = 0.57), indicating that members believe in, or have already witnessed, improvements in this regard. Teamwork and synergy, integral aspects of the Framework for School Board Development, also strengthened with LSG implementation: board members rated improved collaboration with superintendents at a mean score of 4.25 (SD = 0.83) and stronger relationships among board members at a mean score of 3.98 (SD = 0.78). Finally, board members believed their behaviors had changed because of LSG, with a mean score of 4.04 (SD = 0.65), and credited the integrity instrument with facilitating this change, with a mean score of 4.50 (SD = 0.65).

The second LSG intervention centered on coaching, and the research aimed to gauge its effectiveness. Instructional coaching is known to improve outcomes, skills, and collaboration (Denton & Hasbrouck, 2009; Kraft et al., 2016; Spelman et al., 2016); in parallel, the survey results show strong agreement among board members that governance coaches were effective, with a mean score of 4.66 (SD = 0.52).

Finally, the board member survey results address the persistent misconception that LSG is intended solely for low-performing schools rather than serving as a continuous improvement model. When asked whether LSG was designed exclusively for low-performing schools, board members on average disagreed, with a mean score of 2.00 (SD = 1.14). Instead, they strongly agreed that they would recommend LSG to other districts, with a mean score of 4.77 (SD = 0.47), and that LSG is an effective tool for governance teams, with a mean score of 4.56 (SD = 0.68).

Superintendent Interviews

The qualitative segment of this mixed-methods study consisted of interviews with nine superintendents from the ten districts under study. Analysis of their responses revealed several prominent themes in the superintendents' perspectives on LSG training:

Understanding of Board's Role: Superintendents noted that LSG training significantly enhanced their understanding of the board's role in the educational landscape.

Academic Focus: LSG underscored the paramount importance of an academic focus, aligning with the core mission of the districts.

Vision Enhancement: LSG played a pivotal role in helping districts develop a stronger, more focused vision.

Value in Coaching: Superintendents recognized the substantial value of coaching in the LSG implementation process.

Improved Board/Superintendent Relations: LSG was instrumental in fostering improved dynamics and relationships between the board and the superintendent.

Principal Support: An unexpected but noteworthy finding was the support extended to principals, which 55% of superintendents attributed to the LSG coaching sessions, a component of this study's intervention.

Student-Centric Approach: LSG instilled a clear and unwavering focus on students' well-being and academic outcomes.

Progress Monitoring: Eighty-nine percent of superintendents emphasized the critical role of progress monitoring within LSG, which helped refocus their districts on achieving their goals.

Building Board Capacity: LSG was found to be instrumental in enhancing the capacity of the board.

Improved District Systems: Superintendents reported tangible improvements in district systems, translating into more effective operations.

Furthermore, when superintendents' responses were mapped to the research questions, it became evident that LSG closely adhered to the Texas State Board of Education's Framework for School Board Development. All of the interviewed superintendents (100%) stated that LSG offered clear guidance on the roles of the board and superintendent. LSG also facilitated conversations centered on student outcomes and played a transformative role in shaping their vision and goals.

Additionally, 78% of superintendents identified improvements in board/superintendent relationships and enhancements in board meeting planning.

Lastly, the impact of LSG coaching was assessed through the superintendent interviews. All superintendents (100%) affirmed that LSG coaching contributed significantly to the successful implementation of LSG best practices. Figure 5 presents selected superintendent comments on governance coaching.

Figure 5

Superintendent Perspective on LSG Coaching

- “Monthly coaching sessions are key to success.”

- “Coaching keeps the board focused because they know someone is holding them accountable.”

- “As a new superintendent, LSG coaching conversations have sped up the relationship between me and my board.”

- “I do not know how LSG could be done without a coach - due to planning, calibration, self-evaluation clarity, and focus.”

- “Without LSG coaching, old habits will creep back in.”

Discussion/Conclusion

Governance teams frequently focus on district-level initiatives while overlooking critical aspects that require attention within the boardroom itself. The foundational Lighthouse Study (Rice et al., 2000) underscored a fundamental truth: school boards that prioritize student outcomes see improvements in those outcomes.

Despite the limitation of a relatively small population, evidence from several sources, including gains in mean accountability scores, the school board survey, and the superintendent interviews, consistently points to a positive impact of LSG on student outcomes. These improvements are achieved through implementing the best practices outlined in the Texas State Board of Education's Framework for School Board Development.

The collaboration and relationship-building emphasized in LSG training address critical needs of the many governance teams across the state that are grappling with complex political and community issues affecting both the board and, ultimately, the students. Although LSG was initially developed to lift districts out of, or protect them from, low-performance status, this origin does not diminish the importance of implementing best practices at all levels of the educational system.

The prevailing misconception that LSG is tailored exclusively for low-performing schools is contradicted by this study. While low-performing campuses clearly experienced substantial gains when implementing LSG, non-low-performing campuses also showed noteworthy improvements. Board member survey responses and superintendent interview insights are consistent with the mean accountability gains observed after LSG implementation.

However, the most notable finding of this study concerns the pivotal role of governance coaching. The results indicate that implementing best practices with fidelity hinges on coaching, a conclusion supported by both the accountability gain data and the superintendent interviews. Lasting behavioral change within governance teams appears to occur only when it is accompanied by the accountability and support that coaching provides. Attending the two-day training may foster productive conversations and a sense of collaboration, but it falls short of producing lasting behavioral transformation. Such transformation requires ongoing coaching and the integration of best practices into day-to-day operations, through a continuous dialogue about what works well and where improvement is needed.

Recommendations for Future School Improvement

To strengthen future school improvement initiatives, a more robust coaching model for LSG implementation is needed at the district and campus levels. Currently, the Effective Schools Framework guides campus-level improvement through Texas Instructional Leadership. In parallel, the Texas Education Agency is developing an Effective District Framework through a community-of-practice model involving education service centers and district leaders.

Crucially, a partnership with those who oversee the LSG initiative is essential to connect it seamlessly with the forthcoming Effective District Framework. Such collaboration could close current training gaps, both strengthening the coaching model and dispelling lingering misconceptions that LSG is designed exclusively for low-performing schools.

This collaborative effort promises a more comprehensive and inclusive approach to school improvement, in which the synergy between LSG and the Effective District Framework amplifies their collective impact, benefiting a broader range of educational institutions and advancing improved student outcomes.

Recommendations for Future Research

Further research with a larger population is essential for a more comprehensive analysis of LSG's impact on student outcomes. While descriptive statistics offer valuable insights for practical application in schools, a robust correlational approach could provide a deeper understanding of the most effective tools and strategies within the LSG framework.

This extended research should span a more diverse range of educational settings to yield a broader and more nuanced perspective on the tangible benefits of LSG. Rigorous correlational methods could pinpoint the LSG components with the greatest influence on student outcomes, supporting evidence-based decision-making and more effective educational practices.

References

Abry, T., Rimm-Kaufman, S., & Curby, T. (2016). Are all program elements created equal? Relations between specific social and emotional learning components and teacher–student classroom interaction quality. Prevention Science, 18(2), 193-203.

Bartoletti, J., & Connelly, G. (2013). Leadership matters: What the research says about the importance of principal leadership. National Association of Secondary School Principals and National Association of Elementary School Principals. https://www.naesp.org/sites/default/files/LeadershipMatters.pdf

Bonal, X., & González, S. (2020). The impact of lockdown on the learning gap: family and school divisions in times of crisis. International Review of Education, 66(5-6), 635-655.

Bruns, B., Costa, L., & Cunha, N. (2018). Through the looking glass: Can classroom observation and coaching improve teacher performance in Brazil? Economics of Education Review, 64, 214-250.

Bryk, A., Gomez, L., Grunow, A., & LaMahieu, P. (2015). Learning to improve: How America’s schools can get better at getting better. Harvard Education Press.

Chen, Y., Chen, N., & Tsai, C. (2009). The use of online synchronous discussion for web based professional development for teachers. Computers & Education, 53(4), 1155-1166.

Crabill, A. (2017). Lone Star Governance. Board Leadership, 2017(153), 6-7.

Dennie, D., Acharya, P., Greer, D., & Bryant, C. (2019). The impact of teacher-student relationships and classroom engagement on student growth percentiles of 7th and 8th grade students. Psychology in the Schools, 56(5), 765-780. https://doi.org/10.1002/pits.22238

Denton, C. A., & Hasbrouck, J. (2009). A description of instructional coaching and its relationship to consultation. Journal of Educational and Psychological Consultation, 19(2), 150-175.

Knight, J. (2007). Instructional coaching: A partnership approach to improving instruction. Thousand Oaks, CA: Corwin Press.

Kraft, M. A., Blazar, D., & Hogan, D. (2016). The effect of teacher coaching on instruction and achievement: A meta-analysis of the causal evidence. Review of Educational Research, 88(4), 547-588.

Mudzimiri, R., Burroughs, E. A., Luebeck, J., Sutton, J., & Yopp, D. (2014). A look inside mathematics coaching: Roles, content, and dynamics. Education Policy Analysis Archives, 22(53).

Nairz-Wirth, E., & Feldmann, K. (2017). Teachers' views on the impact of the teacher-student relationships on school dropout: a Bourdieusian analysis of misrecognition. Pedagogy, Culture & Society, 25(1), 121-136. https://doi.org/10.1080/14681366.2016.1230881

Passmore, J. (2010). A grounded theory study of the coachee experience: The implications for training and practice in coaching psychology. International Coaching Psychology Review, 5(1), 48-62.

Patarapichayatham, C., Locke, V. N., & Lewis, S. (2021). COVID-19 learning loss in Texas. Istation: Dallas, TX, USA.

Rice, D., Delagardelle, M. L., Buckton, M., Jons, C., Lueders, W., Vens, M. J., Joyce, B., Wolf, J., & Weathersby, J. (2000). The lighthouse inquiry: School board/superintendent team behaviors in school districts with extreme differences in student achievement. https://pdfs.semanticscholar.org/1a17/5f1a9c65712a0de98ef80480668036b06be9.pdf

Spelman, M., Bell, D., Thomas, E., & Briody, J. (2016). Combining professional development & instructional coaching to transform the classroom environment in PreK-3 classrooms. Journal of Research in Innovative Teaching, 9(1), 30-46.

Dr. Morris Lyon, Executive Director

Region 3 Education Service Center

He can be reached by phone at 979-229-5466 and by email at mlyon@esc3.net.